Robust logical isolation using AWS STS Scope Down Policies

We explore approaches to tenant isolation in AWS, including a Terraform / boto3 demo for hardened tenant isolation in a SaaS environment.

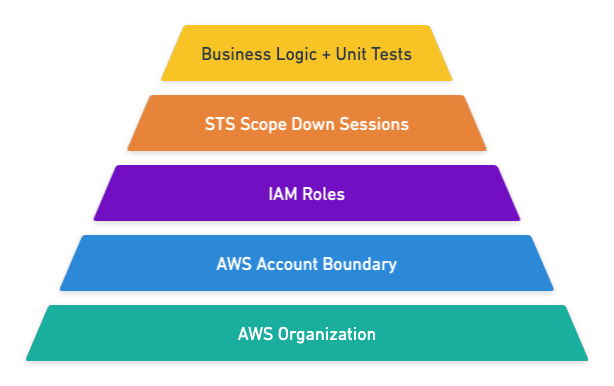

We will refer to this pyramid throughout the article. This is not an official AWS diagram, just my opinion encoded in a pyramid which makes it look more legit.

AWS Organizations and Accounts

The AWS account boundary is the ultimate in logical isolation. If you have the luxury of using a new AWS account for each client or workload, you should consider it. An often overlooked benefit of this approach is that each account gets its own discrete "bucket" of API limits. As well as the logical isolation of data and resources, you gain some resilience for that workload, because a noisy tenant cannot erode service limits that every other tenant depends on.

Managing multiple AWS accounts can be onerous. The tooling and services that enable this are improving, but you will need a high level of automation to manage it effectively: you need to be able to vend new accounts and configure services like Config and CloudTrail to aggregate logs into a security account. You will also need automation to deploy permission sets into the target accounts, which is the modern way to access multiple accounts via AWS IAM Identity Center.

AWS IAM Roles

For workloads where you could have dozens or hundreds of tenants, you are probably not going to go with the workload-per-account approach. But you still have the luxury of creating resources per tenant, e.g. you could have a Terraform module that encapsulates:

- IAM roles
- Lambda Functions
- S3 Buckets
- Step Functions

```hcl
module "workload_client_a" {
  source  = "app.terraform.io/acme/workload/aws"
  version = "0.0.2"

  name     = "client-a"
  workload = "ml"
}

module "workload_client_b" {
  source  = "app.terraform.io/acme/workload/aws"
  version = "0.0.2"

  name     = "client-b"
  workload = "ml"
}
```

You can cookie-cutter this pattern into the account and enforce robust logical isolation: role A can only access bucket A, Step Function A can only invoke Lambda function A, and so on. This gives you a great level of comfort and defense in depth; it is very hard to cross the streams.

What about SaaS Workloads?

For SaaS workloads you could have thousands (hopefully!) of tenants, and the approaches we have examined so far quickly break down. You will run into practical limits with IAM, such as the number of IAM roles you can have, and you could also experience API throttling when attempting to create large volumes of IAM resources. The level of maturity and complexity required in your automation to effectively vend and manage thousands of IAM resources is also very high.

Business Logic + Unit Tests

What can we do? One approach I have seen a lot is to do away with the defense in depth provided by IAM and just have one role, e.g. my_webserver_role. You then rely on business logic and unit tests to provide the logical isolation. This is essentially what's going on in the bowels of a modern web framework such as Rails or Django, where some authorization logic plus the ORM returns the data you should have access to.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "s3:Get*",
      "Effect": "Allow",
      "Resource": "arn:aws:s3:::my-data-bucket/*"
    },
    {
      "Action": "s3:ListBucket*",
      "Effect": "Allow",
      "Resource": "arn:aws:s3:::my-data-bucket"
    }
  ]
}
```

However, this makes me uncomfortable, especially coming from the role-per-tenant world. You are an off-by-one error away from a logical isolation failure. Is there anything we can do here? Can we have our cake and eat it?
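To make that worry concrete, here is a minimal sketch of the kind of guard that business logic alone has to get right. The function names and the `<tenant_id>/<file>` key layout are my own assumptions for illustration, not code from any framework. The buggy version differs from the correct one by a single character.

```python
def buggy_key_belongs_to_tenant(tenant_id: str, key: str) -> bool:
    # Bug: no trailing slash, so tenant "12" also matches keys under "123/".
    return key.startswith(tenant_id)


def key_belongs_to_tenant(tenant_id: str, key: str) -> bool:
    # The trailing slash pins the check to exactly this tenant's prefix.
    return key.startswith(f"{tenant_id}/")


def authorize_key(tenant_id: str, key: str) -> str:
    """Return the key if the tenant owns it, otherwise raise PermissionError."""
    if not key_belongs_to_tenant(tenant_id, key):
        raise PermissionError(f"tenant {tenant_id!r} may not read {key!r}")
    return key
```

With a single shared role, a unit test is the only thing standing between the buggy variant and tenant 12 reading tenant 123's data.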

AWS STS Session Policies

I first learned about the concept of scope-down policies when working with AWS Transfer Family. It solves a similar problem: the AWS Transfer service itself requires a role with access to the bucket, but we want each tenant to only have access to their own key prefix within that bucket. This is achieved using a session policy, which is evaluated in real time. The access granted is the intersection of the permissions provided by the role assigned to AWS Transfer and the session policy itself. Very powerful!

The STS service also provides this functionality! What I mean by that is instead of just assuming a role and inheriting that role's permissions, you can provide a session policy during the assume_role operation, and the session returned is now "scoped down" to the intersection of the role's policies and the session policy. This protection is provided at the IAM level, so you are providing a level of defense in depth that will protect you from business logic errors or horizontal privilege escalation attempts.
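The "intersection" idea can be sketched with a toy model. This is emphatically not how IAM evaluates policies (it ignores Deny statements, conditions, case-insensitive action matching, and much more), and the helper names are hypothetical. It only shows that a request must be allowed by both the role's policy and the session policy. The two policies are the bucket-wide role policy shown earlier and a session policy limited to the 123/ prefix.

```python
from fnmatch import fnmatchcase

ROLE_POLICY = {
    "Statement": [
        {"Effect": "Allow", "Action": "s3:Get*",
         "Resource": "arn:aws:s3:::my-data-bucket/*"},
        {"Effect": "Allow", "Action": "s3:ListBucket*",
         "Resource": "arn:aws:s3:::my-data-bucket"},
    ]
}

SESSION_POLICY = {
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:GetObject*"],
         "Resource": ["arn:aws:s3:::my-data-bucket/123/*"]},
    ]
}


def _as_list(value):
    # Policy fields may be a single string or a list of strings.
    return [value] if isinstance(value, str) else list(value)


def policy_allows(policy, action, resource):
    """Toy check: does any Allow statement match both action and resource?"""
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        action_ok = any(fnmatchcase(action, p) for p in _as_list(statement["Action"]))
        resource_ok = any(fnmatchcase(resource, p) for p in _as_list(statement["Resource"]))
        if action_ok and resource_ok:
            return True
    return False


def session_allows(role_policy, session_policy, action, resource):
    # The effective permission is the intersection: both policies must allow it.
    return (policy_allows(role_policy, action, resource)
            and policy_allows(session_policy, action, resource))
```

In this model, `s3:GetObject` on `123/data.txt` passes both checks, while the same action on `789/data.txt` is allowed by the role but rejected by the session policy, so the overall answer is no.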

There's a full demo repo at the bottom of this article. Let's look at how you could utilize session policies to add another layer of hardening to your tenant isolation.

Imagine the policy above was attached to a role called app_role, and we have a Lambda function configured to use this role. If we create an S3 client in this app, it just gets temporary credentials in the context of app_role. It can then access any key in the bucket. Below we are accessing data for tenant 123.

```python
import boto3

# Uses the Lambda's own credentials, i.e. app_role
s3_client = boto3.client("s3")

response = s3_client.get_object(
    Bucket="my-data-bucket",
    Key="123/data.txt",
)
```

We have no protection here other than our business logic. Let's look at how we could change this.

We will remove the policy that allows access to S3 from app_role, and instead we will create a new role called data_access_role:

```hcl
resource "aws_iam_role" "data_access_role" {
  name               = "data_access_role"
  assume_role_policy = data.aws_iam_policy_document.access_role_trust.json
}

# The trust relationship allows the app_role to assume this role
data "aws_iam_policy_document" "access_role_trust" {
  statement {
    actions = [
      "sts:AssumeRole"
    ]

    principals {
      type = "AWS"
      identifiers = [
        aws_iam_role.app_role.arn
      ]
    }
  }
}

data "aws_iam_policy_document" "data_access_policy" {
  statement {
    actions   = ["s3:Get*"]
    resources = ["${aws_s3_bucket.demo.arn}/*"]
  }

  statement {
    actions   = ["s3:ListBucket*"]
    resources = [aws_s3_bucket.demo.arn]
  }
}

resource "aws_iam_role_policy" "data_access_policy" {
  role   = aws_iam_role.data_access_role.id
  policy = data.aws_iam_policy_document.data_access_policy.json
}
```

What have we done?

- Removed data access from the app_role.
- Added this access to a new role called data_access_role.
- Configured the trust relationship of data_access_role to allow app_role to assume it.

There is now no way to access the my-data-bucket S3 bucket directly from this Lambda function; we must first assume the data_access_role. And when we assume this role, we pass a session policy:

```python
import json

import boto3
from boto3.session import Session

# ARN of data_access_role; the account ID here is a placeholder
role_arn = "arn:aws:iam::111122223333:role/data_access_role"

# Scoped down to tenant 123 only
scope_down_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ScopeDown",
            "Effect": "Allow",
            "Action": ["s3:GetObject*"],
            "Resource": ["arn:aws:s3:::my-data-bucket/123/*"],
        }
    ],
}


def get_session(assumed_role):
    """Build a boto3 Session from the credentials returned by assume_role."""
    credentials = assumed_role["Credentials"]
    return Session(
        aws_access_key_id=credentials["AccessKeyId"],
        aws_secret_access_key=credentials["SecretAccessKey"],
        aws_session_token=credentials["SessionToken"],
    )


def get_scoped_down_s3_client():
    """Assume data_access_role with the session policy and return an S3 client."""
    client = boto3.client("sts")
    assumed_role = client.assume_role(
        RoleArn=role_arn,
        RoleSessionName="data_access_scope_down_test",
        Policy=json.dumps(scope_down_policy),
    )
    return get_session(assumed_role).client("s3")


# Create a client in the context of a scoped down session
s3_client = get_scoped_down_s3_client()

# This will work
s3_client.get_object(
    Bucket="my-data-bucket",
    Key="123/data.txt",
)

# This will not work: the session policy does not cover the 789/ prefix,
# so S3 rejects the request with AccessDenied
s3_client.get_object(
    Bucket="my-data-bucket",
    Key="789/data.txt",
)
```

Now the s3_client has temporary credentials in the context of a session that is the intersection of the policies attached to the data_access_role and the session policy itself. This s3_client cannot "escape" the 123/ prefix.
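In a real app the tenant ID would not be hard-coded, so here is a hedged sketch of how the policy could be built per tenant. Everything named here (`TENANT_ID_PATTERN`, `build_scope_down_policy`, `assume_tenant_role`, the `tenant-<id>` session name) is my own assumption, not part of the demo repo. The STS client is passed in rather than created inside the function, which keeps the sketch testable without AWS credentials. The tenant ID is validated before it is interpolated, because a value like `*` would silently widen the Resource and defeat the point.

```python
import json
import re

# Hypothetical rule for what a tenant ID may look like; adjust to your own scheme.
TENANT_ID_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,32}")
# STS documents a 2,048 character limit on session policy plaintext.
MAX_SESSION_POLICY_CHARS = 2048


def build_scope_down_policy(bucket: str, tenant_id: str) -> dict:
    """Return a session policy limited to objects under '<tenant_id>/'."""
    if not TENANT_ID_PATTERN.fullmatch(tenant_id):
        # Rejects '*', '?', '/' and empty strings so the Resource cannot be widened.
        raise ValueError(f"invalid tenant id: {tenant_id!r}")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ScopeDown",
                "Effect": "Allow",
                "Action": ["s3:GetObject*"],
                "Resource": [f"arn:aws:s3:::{bucket}/{tenant_id}/*"],
            }
        ],
    }


def assume_tenant_role(sts_client, role_arn: str, bucket: str, tenant_id: str) -> dict:
    """Assume role_arn with a per-tenant session policy.

    Returns keyword arguments suitable for boto3.session.Session(**kwargs).
    """
    policy = json.dumps(build_scope_down_policy(bucket, tenant_id))
    if len(policy) > MAX_SESSION_POLICY_CHARS:
        raise ValueError("session policy exceeds the STS size limit")
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=f"tenant-{tenant_id}",
        Policy=policy,
    )
    credentials = response["Credentials"]
    return {
        "aws_access_key_id": credentials["AccessKeyId"],
        "aws_secret_access_key": credentials["SecretAccessKey"],
        "aws_session_token": credentials["SessionToken"],
    }
```

Usage would look something like `Session(**assume_tenant_role(boto3.client("sts"), role_arn, "my-data-bucket", "123")).client("s3")`. Using the tenant ID in the session name is a design choice that also makes the tenant visible in CloudTrail entries for the assumed-role session.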

You can use this technique to create boto3 sessions and API clients in the context of a single tenant. This gives you defense in depth: the IAM service will have your back. You could extend this example to provide granular access to:

- Secrets Manager Secrets
- Systems Manager Parameters
- Item-level access in DynamoDB tables
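As a rough idea of what the DynamoDB case could look like, the sketch below builds a session policy using the `dynamodb:LeadingKeys` condition key. It assumes a table whose partition key is the tenant ID, which is my assumption and not something the demo repo contains. The function name and the chosen actions are also illustrative. Remember that the assumed role itself must allow these actions on the table too, since the session policy can only narrow what the role already grants.

```python
def build_dynamodb_scope_down_policy(table_arn: str, tenant_id: str) -> dict:
    """Session policy limiting reads to items whose partition key is tenant_id.

    Assumes the table's partition key holds the tenant ID.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "TenantItemsOnly",
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:Query"],
                "Resource": [table_arn],
                "Condition": {
                    # Every partition key value in the request must equal tenant_id.
                    "ForAllValues:StringEquals": {
                        "dynamodb:LeadingKeys": [tenant_id]
                    }
                },
            }
        ],
    }
```

The resulting dict would be passed to `assume_role` as `Policy=json.dumps(...)`, exactly like the S3 scope-down policy.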

Demo

https://github.com/conzy/sts-scope-down

Running the demo:

```shell
git clone git@github.com:conzy/sts-scope-down.git
cd sts-scope-down
terraform init
terraform apply
```

The demo creates all the resources mentioned in the article: the app_role, the data_access_role, the trust relationships, and the S3 bucket with objects for tenant 123 and tenant 789. It also deploys a Lambda function with a demo Python app that implements the scope-down policy. It's an ideal environment to experiment with.